Topics of interest

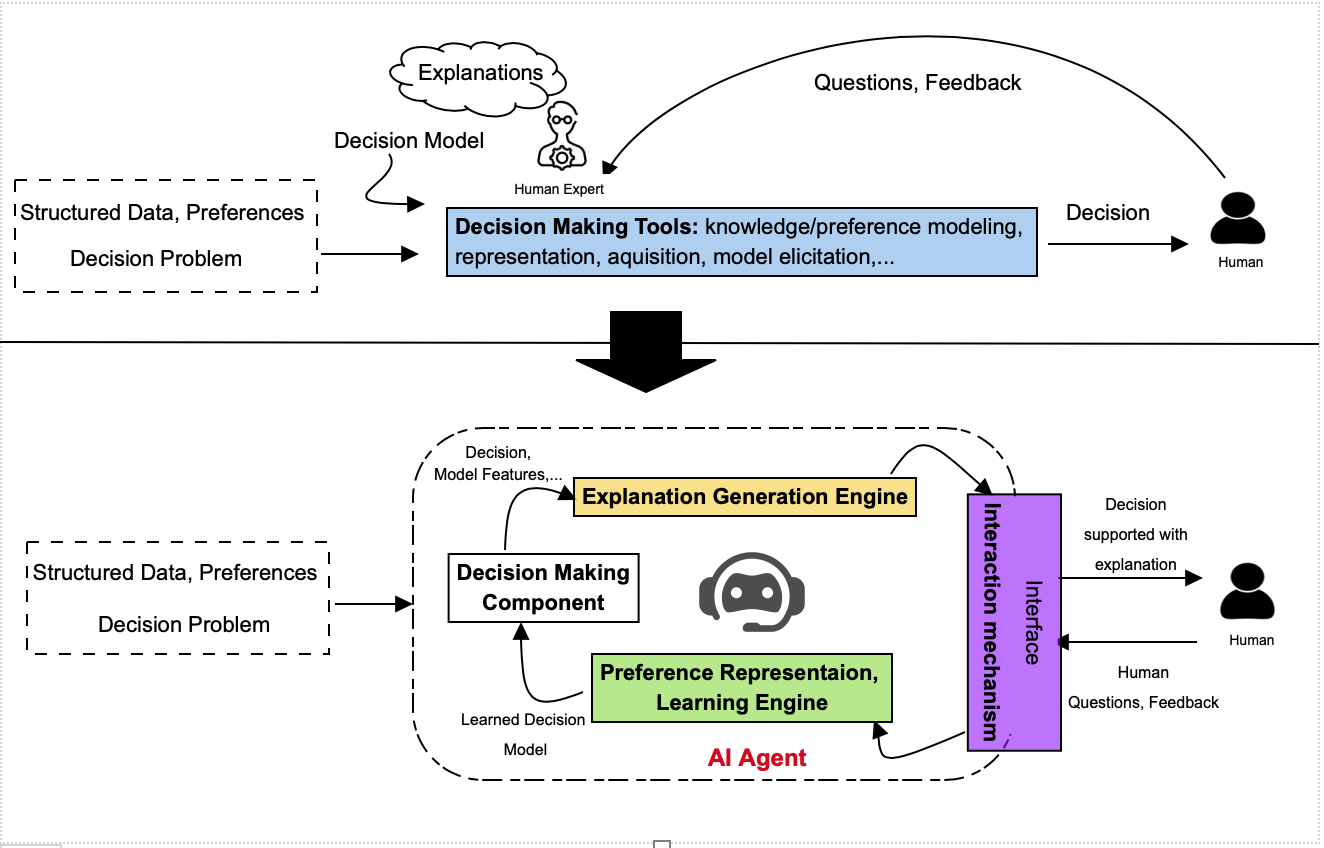

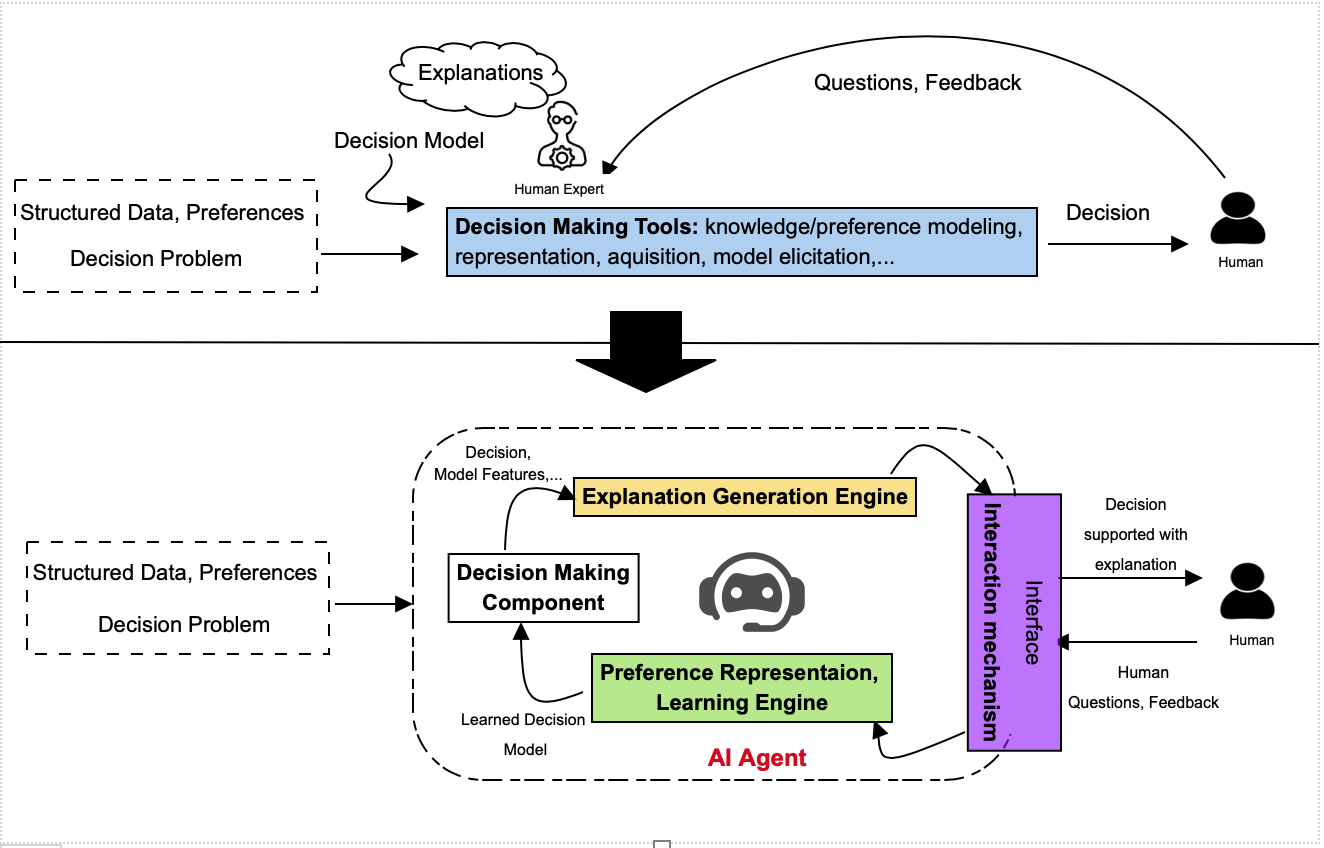

Decision aiding is the result of an interaction between an analyst and a decision maker, in which the analyst aims to guide the decision maker in building and understanding the recommendations for a particular decision problem. Nowadays, decision aiding situations are pervasive: they can occur in settings where the role of the analyst is taken by a non-expert, or, in some extreme cases, by an artificial agent. This means that several aspects usually delegated to the human analyst (such as learning preferences, structuring the interaction, providing explanations, handling user feedback, …) should ideally be handled by the artificial agent. Thus, we need, on the one hand, a formal theory of preferences and, on the other hand, a formal language able to represent the dialogue and to explain and communicate its outcomes, in order to convince the user that what is happening is both theoretically sound and operationally reasonable.

My main research interests include multiple criteria decision analysis, argumentation theory, Artificial Intelligence and the decision aiding process. More precisely, they concern:

Adaptive Explanation of Algorithmic Decisions

The ability to provide explanations along with recommended decisions is a key feature of decision-aiding tools. Roughly speaking, the aim is to increase the user's acceptance of the recommended choice by providing supporting evidence that this choice is justified. One of the difficulties lies in the fact that the relevant notion of explanation may differ depending on the problem at hand and on the targeted audience. The main challenges are:

Dealing with the variety of decision models. A system often implements a single explanation strategy, dedicated to the decision model it uses. Our ambition is to provide a catalogue of explanation options that can be used in different contexts (an ambition supported by the existence of a large panel of multiple criteria decision models).

Dealing with the variety of user profiles. Each of the explanation options mentioned in the previous point can be filtered to match a specific user profile. The idea of tailoring explanations to a given user (e.g. finding the right level of abstraction) is not new in itself, but the aim here is to provide a single catalogue whose options depend on the decision model.

Models for adaptive interaction

In the classical flow envisioned in decision-support systems, three phases are carried out sequentially: elicitation, recommendation, and finally explanation. The aim is to provide an integrated model in which explanations can be provided during the elicitation process. Such explanations may be partial and may be used to guide the elicitation, provided the user is convinced by the system's justification. The main issues are: i) how to design the interaction between a system and a human decision maker (a dialogue game protocol), and ii) how to adapt classical elicitation algorithms so that they can cope with inconsistent user feedback, by automatically adjusting the model to the preference information provided by the user.

Designing and applying decision aiding methodology

Through the previous points, our ambition is to develop solid theoretical frameworks. Beyond this, we wish to demonstrate the usefulness and applicability of our proposals in real situations. The aim is to use methodologies and tools based on MCDA (Multiple Criteria Decision Aiding) and AI to model and solve real decision problems.